Inspired by @srtcd424’s approach of using an intermediate KV store (dunno who else suggested it), I went ahead and wrote another binary that generates that store from an existing /nix/store. It’s the mapper in nix-subsubd.

Mapper

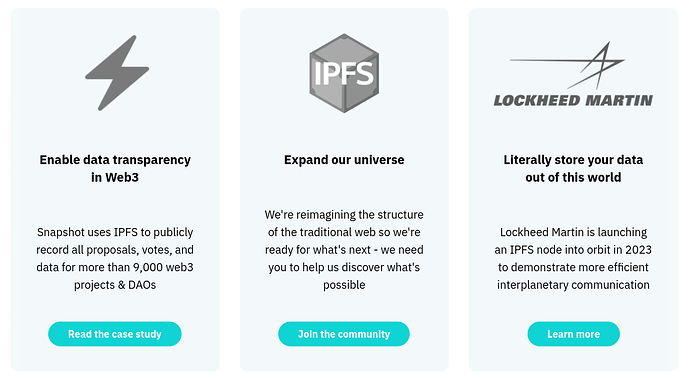

Right now nss-mapper works by grabbing the hash from /nix/store/$hash-$whatever, querying the binary cache (configurable, but https://cache.nixos.org by default) for the .narinfo, downloading and streaming the .nar.xz to a “hasher” (configurable, but IPFS at the moment), then writing the result as a data structure to dir/$shaFirstLetter/$sha.yaml.
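To make the path handling above concrete, here's a minimal sketch of the three string-level steps: pulling the hash out of a store path, building the .narinfo URL, and building the output file path. The function names are made up for illustration and aren't taken from nss-mapper. Which SHA ends up in the file name is an assumption too, so the sketch just takes it as a parameter.

```rust
use std::path::{Path, PathBuf};

/// Extracts `$hash` from `/nix/store/$hash-$whatever`.
/// Hypothetical helper, not the actual nss-mapper code.
fn store_hash(store_path: &str) -> Option<&str> {
    let rest = store_path.strip_prefix("/nix/store/")?;
    let (hash, _name) = rest.split_once('-')?;
    // Store path hashes are 32 base32 characters.
    if hash.len() == 32 && hash.chars().all(|c| c.is_ascii_alphanumeric()) {
        Some(hash)
    } else {
        None
    }
}

/// Builds the .narinfo URL for a store hash on a given binary cache.
fn narinfo_url(cache: &str, hash: &str) -> String {
    format!("{}/{}.narinfo", cache.trim_end_matches('/'), hash)
}

/// Builds `dir/$shaFirstLetter/$sha.yaml` for an output directory and a SHA.
fn entry_path(dir: &Path, sha: &str) -> Option<PathBuf> {
    let first = sha.chars().next()?;
    Some(dir.join(first.to_string()).join(format!("{}.yaml", sha)))
}
```

Sharding by the first letter keeps any single directory from holding every entry, which matters once a store has tens of thousands of paths.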

My store has >30k files, and it took 2 hours to get to 5k, so it’s not the fastest. That’s probably also because there are friggin .drv, .patch, and a bunch of other tiny files in the binary cache.

Proxy

Anyway, the proxy is now called nss (short for nix-subsubd) and can use the KV store generated by the “mapper”. It introduces 2 concepts:

A mapper maps the SHA256 hashsum of a file to an entry in the KV store, which in turn contains the details necessary for the backend to retrieve the corresponding NAR. Both mapper and backend are traits / interfaces, and thus the sky is the limit for what implementations can come.

Right now, there’s a FolderMapper which (and as I’m writing this I see how it can get confusing) uses the output of nss-mapper, but if somebody fancies it they could implement an SqliteMapper to query a DB, an HttpMapper to query a service, a SocketMapper to speak to another program over a socket, or whatever.
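For anyone wondering what "implement a mapper" could look like, here's a rough sketch of the idea. The trait name matches the concept, but the method name, the `Entry` struct, and its single field are assumptions for illustration. The real trait in nss may look quite different. `MemoryMapper` is a toy stand-in for something like FolderMapper or SqliteMapper.

```rust
use std::collections::HashMap;

/// Hypothetical record stored per NAR; the real fields in nss may differ.
#[derive(Debug, Clone, PartialEq)]
struct Entry {
    /// Something a backend understands, e.g. an IPFS CID.
    locator: String,
}

/// Sketch of the mapper concept: SHA256 hashsum -> details for the backend.
trait Mapper {
    fn lookup(&self, sha256: &str) -> Option<Entry>;
}

/// Toy in-memory mapper; a real one would read files, query a DB, etc.
struct MemoryMapper {
    entries: HashMap<String, Entry>,
}

impl Mapper for MemoryMapper {
    fn lookup(&self, sha256: &str) -> Option<Entry> {
        // Clone so the caller owns the entry independently of the map.
        self.entries.get(sha256).cloned()
    }
}
```

Any other mapper would only need to provide its own `lookup`, so the proxy wouldn't have to care where the entries come from.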

As for the backend, right now there’s an IpfsBackend to grab NARs from IPFS, but if somebody wants to they can implement a TorrentBackend to download torrents on the fly and stream the response, or HttpMirrorBackend to round robin on a list of HTTP/HTTPS mirrors, TahoeLafsBackend, S3Backend, or whatever you fancy implementing.

And yes, it does fall back to the binary cache if something fails.
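The backend-plus-fallback flow can be sketched roughly like this. Everything here is an assumption for illustration: the `Backend` method signature, the `Source` enum, and `fetch_nar` are not the actual nss API. It also buffers the NAR into a `Vec<u8>` to keep the example short, whereas the real proxy streams the response.

```rust
/// Where the bytes ended up coming from (hypothetical, for illustration).
#[derive(Debug, PartialEq)]
enum Source {
    Backend,
    BinaryCache,
}

/// Sketch of the backend concept: fetch a NAR given a locator from the mapper.
trait Backend {
    fn fetch(&self, locator: &str) -> Result<Vec<u8>, String>;
}

/// Tries the backend first; on a missing mapping or any backend error,
/// falls back to the binary cache (passed in as a closure here).
fn fetch_nar<F>(
    backend: &dyn Backend,
    locator: Option<&str>,
    binary_cache: F,
) -> Result<(Source, Vec<u8>), String>
where
    F: FnOnce() -> Result<Vec<u8>, String>,
{
    if let Some(locator) = locator {
        if let Ok(bytes) = backend.fetch(locator) {
            return Ok((Source::Backend, bytes));
        }
    }
    binary_cache().map(|bytes| (Source::BinaryCache, bytes))
}

/// Toy backend that always fails, to exercise the fallback path.
struct AlwaysFails;

impl Backend for AlwaysFails {
    fn fetch(&self, _locator: &str) -> Result<Vec<u8>, String> {
        Err("peer unreachable".to_string())
    }
}

/// Toy backend that returns the locator bytes as the "NAR".
struct Echo;

impl Backend for Echo {
    fn fetch(&self, locator: &str) -> Result<Vec<u8>, String> {
        Ok(locator.as_bytes().to_vec())
    }
}
```

The upshot of this shape is that a broken or incomplete backend can only make things slower, not break substitution, as long as the binary cache itself is reachable.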

Future

There’s a bunch of stuff missing, most notably documentation.

The next month might get pretty busy, so I’m just dropping this here before I disappear for a while. Maybe somebody will want to try it out, add a new mapper, or a new backend, or whatever.

What else is missing:

- automatic tests (coverage is low af)
- nss-mapper is currently sync (could be async, but async rust streams cooked my brain)
- smaller features like filtering /nix/store input to nss-mapper
- a git repo with the output of nss-mapper
- better logging throughout the project
- better error HTTP responses from nss when turds hit the fan
- probably a bunch more stuff

Anyway, feel free to test, fork, contribute, give constructive feedback, …

See y’all again in a month!
